Protecting Sensitive Data in Event-Sourced Systems with Crypto Shredding

As opposed to more traditional systems where the state is persisted in a database and where CRUD operations are exposed to mutate and read it, in Event Sourcing every single change is recorded as an event within a stream in an append-only fashion and the current state is derived from the event log.

This process is deterministic because the events are immutable. Once an event has been produced, it cannot be modified. Therefore we can trust the event log as the source of truth and use it as often as we want, to create projections extracting the details the events hold into whichever appropriate form fits our needs.

As powerful as this concept is, it doesn't come without challenges. For instance, consider a system that stores sensitive data such as personally identifiable information, and a user who exercises their right to be forgotten.

In traditional systems that use CRUD operations in databases, a row or document can be updated or deleted by anyone with access to it, and the sensitive information can be masked or deleted whenever required. What may seem like a flexible mechanism also opens the door to data loss (update and delete operations destroy information) and to a mismatch between the expected current state and the actual current state.

On the other hand, event-sourced systems follow a zero data loss approach. If a persisted event cannot be modified, why not provide a mechanism to make information illegible at will?

Crypto Shredding

This is the reasoning behind a technique called crypto shredding. The sensitive data inside the events is encrypted with a symmetric algorithm. The information can be retrieved at any time and, as long as the secret key (referred to as the private key in the rest of this article) is available, it can be decrypted. When we decide to make this information illegible, we simply delete the key, and the information can never be decrypted again.

I remember hearing about some unfortunate people who own millions' worth of cryptocurrency and are desperate because they lost their private encryption key and can no longer access their wallets. The whole point of passwords or private keys is to protect access to something; lose the key, and you won't be able to access it anymore, even if it's still physically there.
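The idea can be sketched in a few lines before looking at the full solution. This is a minimal illustration rather than the implementation developed in this article: the class and method names are hypothetical, it assumes .NET 6 or later (for Aes.EncryptCbc, Aes.DecryptCbc and RandomNumberGenerator.GetBytes), and it keeps the keys in a plain in-memory dictionary.

```csharp
using System;
using System.Collections.Generic;
using System.Security.Cryptography;
using System.Text;

// Hypothetical helper for illustration only; not part of the article's solution.
public static class CryptoShreddingSketch
{
    // One secret key per data subject. Removing an entry "shreds" that subject's data.
    private static readonly Dictionary<string, byte[]> Keys = new Dictionary<string, byte[]>();

    public static byte[] Protect(string subjectId, string plainText)
    {
        if (!Keys.TryGetValue(subjectId, out var key))
        {
            key = RandomNumberGenerator.GetBytes(32); // 256-bit AES key
            Keys[subjectId] = key;
        }

        using var aes = Aes.Create();
        aes.Key = key;

        // Assumption of this sketch: a fresh IV per value, stored in front of the ciphertext.
        var iv = RandomNumberGenerator.GetBytes(16);
        var cipher = aes.EncryptCbc(Encoding.UTF8.GetBytes(plainText), iv);

        var result = new byte[iv.Length + cipher.Length];
        iv.CopyTo(result, 0);
        cipher.CopyTo(result, iv.Length);
        return result;
    }

    // Returns null once the subject's key has been deleted.
    public static string Reveal(string subjectId, byte[] protectedData)
    {
        if (!Keys.TryGetValue(subjectId, out var key))
        {
            return null;
        }

        using var aes = Aes.Create();
        aes.Key = key;

        ReadOnlySpan<byte> iv = protectedData.AsSpan(0, 16);
        ReadOnlySpan<byte> cipher = protectedData.AsSpan(16);
        return Encoding.UTF8.GetString(aes.DecryptCbc(cipher, iv));
    }

    public static void Forget(string subjectId)
    {
        Keys.Remove(subjectId);
    }
}
```

Calling Protect and then Reveal returns the original text; after Forget, the stored bytes are still there but Reveal has nothing to decrypt them with.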

Copy and Replace is Always an Option

Crypto shredding makes sense for new systems that are to be developed with Event Sourcing, but what if we already have an existing event-sourced system where some of the persisted events contain personal data?

In that case, one thing we could do is write a process that reads all the existing events in the event store and maps them to equivalent ones with their personal data encrypted, along with a way to associate an encryption key with each of the personal data owners. It's a copy and replace process.

This does not need to be perfectly written code, as it will hopefully be used only once. Each new event produced will be stored in a different event store and, once everything is working as before but with the new crypto shredding approach, the old event store can be removed. Special care must be taken before that, of course.

The different read models and projections can be regenerated out of the new event streams. If everything was done properly, the projections should look exactly the same as before up until the same point in time.

Implementing Crypto Shredding

Behind this simple idea there are some technical challenges and some decisions to make. For example:

- Identify sensitive data in an event

- Associate sensitive data to a subject

- Store private encryption keys

- Get rid of the private encryption key when desired

- Cryptographic algorithm to use when encrypting and decrypting

- Encrypt text and other data types

- Upstream serialization with encryption mechanism

- Decrypt text and masking mechanism when it cannot be decrypted

- Downstream deserialization with decryption mechanism

- Wire up together with an EventStoreDB gRPC client

- Test everything with an event store.

These issues need to be tackled at some point, and a good way to go through them is with examples. I'll show you a possible solution using C# and the Newtonsoft.Json serialization library. The idea is valid for other programming languages and serialization libraries as well. In the last part I'll show you how to wire things up with a .NET EventStoreDB gRPC client and demonstrate its use with EventStore running as a Docker container.

Identify Sensitive Data in an Event

Only some events would contain personally identifiable information, and we need a mechanism to detect it. When defining the events it should be clear which fields contain data to encrypt, and attributes can help with that.

Create an attribute to be used as [PersonalData] later on.

    public class PersonalDataAttribute : Attribute
    {
    }

Associate Sensitive Data to a Subject

The [PersonalData] attribute can identify which field to encrypt, but in order to know which encryption key to use when encrypting we need a way to associate personally identifiable information properties with a specific person. We will refer to this as the data subject Id.

Create another attribute to be used as [DataSubjectId].

    public class DataSubjectIdAttribute : Attribute
    {
    }

Now we can create our events and clearly define which fields need to be protected and for whom.

Let's assume we have a marker interface to be used in our events. This is optional, but I will be basing my code on its existence.

    public interface IEvent
    {
    }

Consider the following example where there is an event ContactAdded that contains information about a person.

    public class ContactAdded : IEvent
    {
        public Guid AggregateId { get; set; }

        [DataSubjectId]
        public Guid PersonId { get; set; }

        [PersonalData]
        public string Name { get; set; }

        [PersonalData]
        public DateTime Birthday { get; set; }

        public Address Address { get; set; } = new Address();
    }

    public class Address
    {
        [PersonalData]
        public string Street { get; set; }

        [PersonalData]
        public int Number { get; set; }

        public string CountryCode { get; set; }
    }

All the properties of various types to be protected carry the [PersonalData] attribute, including those within a complex object Address. The text field CountryCode has been intentionally left unprotected.

Also, there is a Guid PersonId that has been marked with the [DataSubjectId] attribute because it uniquely identifies the person who owns the sensitive data and the one that can decide to be forgotten.

We will see later where to place the parsing logic that detects these attributes, but for now keep in mind that we could easily know whether an event contains data to be encrypted or not by looking at whether it contains the [DataSubjectId] attribute. We will be using the following method to do that.

    private string GetDataSubjectId(IEvent @event)
    {
        var eventType = @event.GetType();
        var properties = eventType.GetProperties();
        var dataSubjectIdPropertyInfo =
            properties
                .FirstOrDefault(x => x.GetCustomAttributes(typeof(DataSubjectIdAttribute), false)
                    .Any(y => y is DataSubjectIdAttribute));

        if (dataSubjectIdPropertyInfo is null)
        {
            return null;
        }

        var value = dataSubjectIdPropertyInfo.GetValue(@event);
        var dataSubjectId = value.ToString();

        return dataSubjectId;
    }

Store Private Encryption Keys

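We store the private encryption keys associated with their data subject Id so that the keys can be generated, retrieved, persisted and deleted when desired.

Create a wrapper for an encryption key that contains the key itself and the initialization vector that is generated together with it (exposed as Nonce). Note that this sample reuses the same initialization vector for every value encrypted with a given key, so equal plain values produce equal encrypted values; that keeps the example short, but a production system would more likely generate a fresh one per value and store it next to the encrypted data.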

    public class EncryptionKey
    {
        public byte[] Key { get; }

        public byte[] Nonce { get; }

        public EncryptionKey(
            byte[] key,
            byte[] nonce)
        {
            Key = key;
            Nonce = nonce;
        }
    }

Now let's create a repository to store encryption keys. This can be as sophisticated as we need, but for our sample an in-memory store is enough. The following CryptoRepository stores encryption keys in a dictionary where the key is the data subject Id that identifies a person. It should have a singleton life cycle because the dictionary is shared by every use case.

    public class CryptoRepository
    {
        private readonly IDictionary<string, EncryptionKey> _cryptoStore;

        public CryptoRepository()
        {
            _cryptoStore = new Dictionary<string, EncryptionKey>();
        }

        public EncryptionKey GetExistingOrNew(string id, Func<EncryptionKey> keyGenerator)
        {
            var isExisting = _cryptoStore.TryGetValue(id, out var keyStored);
            if (isExisting)
            {
                return keyStored;
            }

            var newEncryptionKey = keyGenerator.Invoke();
            _cryptoStore.Add(id, newEncryptionKey);

            return newEncryptionKey;
        }

        public EncryptionKey GetExistingOrDefault(string id)
        {
            var isExisting = _cryptoStore.TryGetValue(id, out var keyStored);
            if (isExisting)
            {
                return keyStored;
            }

            return default;
        }
    }

As you can see, it allows adding and retrieving encryption keys, and generating them when needed.

Get Rid of the Private Encryption Key when Desired

Whenever we want to forget a data subject Id and make its personal data illegible we need a way to delete its associated encryption key. Simply add the following method to the CryptoRepository.

    public void DeleteEncryptionKey(string id)
    {
        _cryptoStore.Remove(id);
    }

As mentioned earlier, the idea is that when an event with encrypted data is retrieved from the event store, its sensitive data will be decrypted only if there is still an encryption key available. If the encryption key no longer exists, it's because the subject has exercised their right to be forgotten.
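Because the repository is meant to live as a singleton, several threads may hit the dictionary at once. The following is a hedged sketch of a thread-safe variant built on ConcurrentDictionary; the class name ConcurrentKeyStore is hypothetical, and the generic parameter TKey merely stands in for the EncryptionKey wrapper so that the sketch is self-contained.

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical thread-safe variant of the key repository (illustration only).
// TKey stands in for the article's EncryptionKey class.
public class ConcurrentKeyStore<TKey> where TKey : class
{
    private readonly ConcurrentDictionary<string, TKey> _store =
        new ConcurrentDictionary<string, TKey>();

    public TKey GetExistingOrNew(string id, Func<TKey> keyGenerator)
    {
        // GetOrAdd may invoke the generator more than once under contention,
        // but only one generated key is stored and handed to every caller.
        return _store.GetOrAdd(id, _ => keyGenerator());
    }

    public TKey GetExistingOrDefault(string id)
    {
        return _store.TryGetValue(id, out var key) ? key : null;
    }

    // Returns true when a key existed and was removed.
    public bool Delete(string id)
    {
        return _store.TryRemove(id, out _);
    }
}
```

The shape mirrors the three operations of CryptoRepository, so swapping it in would mostly be a matter of changing the field type; whether that is needed depends on how the application is hosted.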

Cryptographic Algorithm to use when Encrypting and Decrypting

We will be using the symmetric AES algorithm due to its strength.

I won't go into deep cryptographic details here, but basically we need to be able to create an encryptor and a decryptor from a given private key and an initialization vector. To do so, in C# there is a class called Aes. Around it we can build some functionality capable of accessing the previous repository and generating an encryptor or decryptor from an existing or new encryption key.

    using System.Security.Cryptography;

    public class EncryptorDecryptor
    {
        private readonly CryptoRepository _cryptoRepository;

        public EncryptorDecryptor(CryptoRepository cryptoRepository)
        {
            _cryptoRepository = cryptoRepository;
        }

        public ICryptoTransform GetEncryptor(string dataSubjectId)
        {
            var encryptionKey = _cryptoRepository.GetExistingOrNew(dataSubjectId, CreateNewEncryptionKey);
            var aes = GetAes(encryptionKey);
            var encryptor = aes.CreateEncryptor();

            return encryptor;
        }

        public ICryptoTransform GetDecryptor(string dataSubjectId)
        {
            var encryptionKey = _cryptoRepository.GetExistingOrDefault(dataSubjectId);
            if (encryptionKey is null)
            {
                // encryption key was deleted
                return default;
            }

            var aes = GetAes(encryptionKey);
            var decryptor = aes.CreateDecryptor();

            return decryptor;
        }

        private EncryptionKey CreateNewEncryptionKey()
        {
            // Aes.Create() generates a random key and IV for the new instance
            var aes = Aes.Create();
            aes.Padding = PaddingMode.PKCS7;

            var key = aes.Key;
            var nonce = aes.IV;
            var encryptionKey = new EncryptionKey(key, nonce);

            return encryptionKey;
        }

        private Aes GetAes(EncryptionKey encryptionKey)
        {
            var aes = Aes.Create();
            aes.Padding = PaddingMode.PKCS7;
            aes.Key = encryptionKey.Key;
            aes.IV = encryptionKey.Nonce;

            return aes;
        }
    }

Encrypt Text and Other Data Types

Anything that can be represented with text or an array of bytes can be encrypted.

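In .NET any object can be represented as text by calling the method ToString() on it. That means that we can transform a number into text (e.g. 123 is "123"), any Guid (e.g. 00000001-0000-0000-0000-000000000000 is "00000001-0000-0000-0000-000000000000"), any boolean (e.g. true is "True"), or any date (e.g. DateTime.UtcNow is "5/13/2020 5:45:42 PM"), etc. A string is already text, and null references don't need encryption. Keep in mind that ToString() formats numbers and dates according to the current culture, and that the default date format drops fractions of a second, so the text has to be parsed back under the same culture and the round trip is not always lossless.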

We don't care about complex objects because underneath they're all formed of these kinds of simple field data types, and that's certainly convenient as we can just focus on the most granular properties.
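If the services that write and read events may run under different culture settings, one option is to convert values with the invariant culture in both directions. The sketch below is an assumption-laden illustration, not the code used in the rest of this article: the InvariantText class and its method names are hypothetical, while Convert.ToString(object, IFormatProvider) and TypeConverter.ConvertFromInvariantString are standard .NET APIs.

```csharp
using System;
using System.ComponentModel;
using System.Globalization;

// Hypothetical helper (illustration only): culture-independent text conversion.
public static class InvariantText
{
    // Formats a simple value the same way regardless of the current culture.
    public static string ToText(object value)
    {
        return Convert.ToString(value, CultureInfo.InvariantCulture);
    }

    // Parses text produced by ToText back into the requested simple type.
    public static object FromText(Type type, string text)
    {
        var converter = TypeDescriptor.GetConverter(type);
        return converter.ConvertFromInvariantString(text);
    }
}
```

Numbers, booleans and Guids round-trip this way. Dates deserve extra care: a round-trip format such as DateTime.ToString("O", CultureInfo.InvariantCulture) keeps the fractional seconds that the default format discards.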

Add the following FieldEncryptionDecryption class with an encryption method. Later on we'll enhance it with a decryption method.

    using System;
    using System.IO;
    using System.Security.Cryptography;

    public class FieldEncryptionDecryption
    {
        public object GetEncryptedOrDefault(object value, ICryptoTransform encryptor)
        {
            if (encryptor is null)
            {
                throw new ArgumentNullException(nameof(encryptor));
            }

            var isEncryptionNeeded = value != null;
            if (isEncryptionNeeded)
            {
                using var memoryStream = new MemoryStream();
                using var cryptoStream = new CryptoStream(memoryStream, encryptor, CryptoStreamMode.Write);
                using var writer = new StreamWriter(cryptoStream);

                var valueAsText = value.ToString();
                writer.Write(valueAsText);
                writer.Flush();
                cryptoStream.FlushFinalBlock();

                var encryptedData = memoryStream.ToArray();
                var encryptedText = Convert.ToBase64String(encryptedData);

                return encryptedText;
            }

            return default;
        }
    }

The idea is that every field marked with the [PersonalData] attribute goes through this function, where it's converted to text and encrypted using the given encryptor.

Since Json is a weakly typed format, any key can hold its value as a string, even if the original value was a number or a date. That's why every encrypted value is written as its Base64 text representation.

Upstream Serialization with an Encryption Mechanism

We have reached the core of the solution. Now we need a way, given an event that is going to be stored, to serialize it so that its personal data is encrypted and associated with a subject Id for when it needs to be decrypted.

Summarizing, the upstream process is the following:

- An event is to be stored

- Find out whether it contains personal data to be encrypted and who owns this data

- Retrieve an existing encryption key for the data subject or create a new one if the person was not yet in the system

- Rely on the json serialization mechanism to encrypt each property marked with the [PersonalData] attribute using the above field encryption functionality.

First of all, for this solution I am using Newtonsoft.Json, a well-known and efficient .NET Json serializer library. With it, we can achieve some very useful things, such as customizing how every specific data type and value is serialized. I'll show you how to access this functionality and decide upon each field.

There are a lot of pieces here, but bear with me and we'll go through them step by step.

This library has a concept called contract resolvers, which define the behaviour when writing to and reading from Json. If nothing is specified it uses a default contract resolver, but in our case we want to customize the serialization to step into the Json writing process.

Create the following:

    using System;
    using System.Collections.Generic;
    using System.Linq;
    using System.Security.Cryptography;
    using Newtonsoft.Json;
    using Newtonsoft.Json.Serialization;

    public class SerializationContractResolver : DefaultContractResolver
    {
        private readonly ICryptoTransform _encryptor;
        private readonly FieldEncryptionDecryption _fieldEncryptionDecryption;

        public SerializationContractResolver(
            ICryptoTransform encryptor,
            FieldEncryptionDecryption fieldEncryptionDecryption)
        {
            _encryptor = encryptor;
            _fieldEncryptionDecryption = fieldEncryptionDecryption;
            NamingStrategy = new CamelCaseNamingStrategy();
        }

        protected override IList<JsonProperty> CreateProperties(Type type, MemberSerialization memberSerialization)
        {
            var properties = base.CreateProperties(type, memberSerialization);
            foreach (var jsonProperty in properties)
            {
                var isPersonalIdentifiableInformation = IsPersonalIdentifiableInformation(type, jsonProperty);
                if (isPersonalIdentifiableInformation)
                {
                    var serializationJsonConverter = new EncryptionJsonConverter(_encryptor, _fieldEncryptionDecryption);
                    jsonProperty.Converter = serializationJsonConverter;
                }
            }

            return properties;
        }

        private bool IsPersonalIdentifiableInformation(Type type, JsonProperty jsonProperty)
        {
            var propertyInfo = type.GetProperty(jsonProperty.UnderlyingName);
            if (propertyInfo is null)
            {
                return false;
            }

            var hasPersonalDataAttribute =
                propertyInfo.CustomAttributes
                    .Any(x => x.AttributeType == typeof(PersonalDataAttribute));
            var propertyType = propertyInfo.PropertyType;
            var isSimpleValue = propertyType.IsValueType || propertyType == typeof(string);
            var isSupportedPersonalIdentifiableInformation = isSimpleValue && hasPersonalDataAttribute;

            return isSupportedPersonalIdentifiableInformation;
        }
    }

This custom contract resolver receives the encryptor and an instance of FieldEncryptionDecryption. It kicks in when an object is being serialized into Json, and it checks each property that is a string or another type that can be encrypted to find out whether it carries the [PersonalData] attribute. If it does, it instantiates a custom JsonConverter called EncryptionJsonConverter. Implement it as follows.

    using System;
    using System.Security.Cryptography;
    using Newtonsoft.Json;

    public class EncryptionJsonConverter : JsonConverter
    {
        private readonly ICryptoTransform _encryptor;
        private readonly FieldEncryptionDecryption _fieldEncryptionDecryption;

        public EncryptionJsonConverter(
            ICryptoTransform encryptor,
            FieldEncryptionDecryption fieldEncryptionDecryption)
        {
            _encryptor = encryptor;
            _fieldEncryptionDecryption = fieldEncryptionDecryption;
        }

        public override void WriteJson(JsonWriter writer, object value, Newtonsoft.Json.JsonSerializer serializer)
        {
            var result = _fieldEncryptionDecryption.GetEncryptedOrDefault(value, _encryptor);
            writer.WriteValue(result);
        }

        public override object ReadJson(JsonReader reader, Type objectType, object existingValue, Newtonsoft.Json.JsonSerializer serializer)
        {
            throw new NotImplementedException();
        }

        public override bool CanConvert(Type objectType)
        {
            return true;
        }

        public override bool CanRead => false;

        public override bool CanWrite => true;
    }

This custom converter uses the FieldEncryptionDecryption to obtain the encrypted value of a field that had been marked as [PersonalData]. For that it needs the encryptor instance.

Newtonsoft.Json has a basic mechanism to serialize an object to Json and vice versa:

- JsonConvert.SerializeObject(myObject, serializerSettings) would convert myObject to a string using the provided settings.

- JsonConvert.DeserializeObject(myJson, myEventType, deserializerSettings) would convert myJson to an instance of the specified type using the provided settings.

We now need something capable of providing the serializer settings that use the custom contract resolver. We'll enhance this class with deserializer settings later on.

    using Newtonsoft.Json;
    using Newtonsoft.Json.Serialization;

    public class JsonSerializerSettingsFactory
    {
        private readonly EncryptorDecryptor _encryptorDecryptor;

        public JsonSerializerSettingsFactory(EncryptorDecryptor encryptorDecryptor)
        {
            _encryptorDecryptor = encryptorDecryptor;
        }

        public JsonSerializerSettings CreateDefault()
        {
            var defaultContractResolver = new CamelCasePropertyNamesContractResolver();
            var defaultSettings = GetSettings(defaultContractResolver);

            return defaultSettings;
        }

        public JsonSerializerSettings CreateForEncryption(string dataSubjectId)
        {
            var encryptor = _encryptorDecryptor.GetEncryptor(dataSubjectId);
            var fieldEncryptionDecryption = new FieldEncryptionDecryption();
            var serializationContractResolver =
                new SerializationContractResolver(encryptor, fieldEncryptionDecryption);
            var jsonSerializerSettings = GetSettings(serializationContractResolver);

            return jsonSerializerSettings;
        }

        private JsonSerializerSettings GetSettings(IContractResolver contractResolver)
        {
            var settings =
                new JsonSerializerSettings
                {
                    ContractResolver = contractResolver
                };

            return settings;
        }
    }

This factory creates a JsonSerializerSettings for different purposes. When encryption is required and a data subject Id is provided, it creates settings for the SerializationContractResolver described before. When encryption is not needed, it creates some default settings that use camel case formatting for Json.

Let's create a wrapper for a serialized event that stores the event itself as an array of bytes and some metadata that will come in handy for the deserialization process later on.

    public class SerializedEvent
    {
        public byte[] Data { get; }

        public byte[] MetaData { get; }

        public bool IsJson { get; }

        public SerializedEvent(byte[] data, byte[] metaData, bool isJson)
        {
            Data = data;
            MetaData = metaData;
            IsJson = isJson;
        }
    }

Now create the functionality that returns serialized data and metadata for a given event that is going to be persisted.

    using System;
    using System.Collections.Generic;
    using System.Linq;
    using System.Text;
    using Newtonsoft.Json;

    public class JsonSerializer
    {
        private const string MetadataSubjectIdKey = "dataSubjectId";

        private readonly JsonSerializerSettingsFactory _jsonSerializerSettingsFactory;
        private readonly IEnumerable<Type> _supportedEvents;

        public JsonSerializer(
            JsonSerializerSettingsFactory jsonSerializerSettingsFactory,
            IEnumerable<Type> supportedEvents)
        {
            _jsonSerializerSettingsFactory = jsonSerializerSettingsFactory;
            _supportedEvents = supportedEvents;
        }

        public SerializedEvent Serialize(IEvent @event)
        {
            var dataSubjectId = GetDataSubjectId(@event);
            var metadataValues =
                new Dictionary<string, string>
                {
                    {MetadataSubjectIdKey, dataSubjectId}
                };

            // Events without a [DataSubjectId] property are serialized without encryption
            var hasPersonalData = dataSubjectId != null;
            var dataJsonSerializerSettings =
                hasPersonalData
                    ? _jsonSerializerSettingsFactory.CreateForEncryption(dataSubjectId)
                    : _jsonSerializerSettingsFactory.CreateDefault();

            var dataJson = JsonConvert.SerializeObject(@event, dataJsonSerializerSettings);
            var dataBytes = Encoding.UTF8.GetBytes(dataJson);

            var defaultJsonSettings = _jsonSerializerSettingsFactory.CreateDefault();
            var metadataJson = JsonConvert.SerializeObject(metadataValues, defaultJsonSettings);
            var metadataBytes = Encoding.UTF8.GetBytes(metadataJson);

            var serializedEvent = new SerializedEvent(dataBytes, metadataBytes, true);

            return serializedEvent;
        }

        private string GetDataSubjectId(IEvent @event)
        {
            var eventType = @event.GetType();
            var properties = eventType.GetProperties();
            var dataSubjectIdPropertyInfo =
                properties
                    .FirstOrDefault(x => x.GetCustomAttributes(typeof(DataSubjectIdAttribute), false)
                        .Any(y => y is DataSubjectIdAttribute));

            if (dataSubjectIdPropertyInfo is null)
            {
                return null;
            }

            var value = dataSubjectIdPropertyInfo.GetValue(@event);
            var dataSubjectId = value.ToString();

            return dataSubjectId;
        }
    }

Remember the GetDataSubjectId created at the beginning of the article? This class is a good place to contain it. There is also the Serialize method that will accept an event, check if it has sensitive information to encrypt and use the proper serializer settings with or without encryption capabilities to generate the Json for data and metadata and transform them into an array of bytes.

The result of this method is what should be stored in the event store.

Decrypt Text and Masking Mechanism when it Cannot be Decrypted

That was long! We're now half way there and I hope that by now, you are already guessing what the downstream deserialization with the decryption mechanism will look like.

But first we need to figure out what happens when an encrypted event is retrieved and there is no encryption key available to decrypt its fields. It could be, as expected, that a user decided to be forgotten and the key was deleted. We will have a Json with encrypted values that needs to be deserialized into a specific object. But this destination object may expect dates, or numbers, or Guid, not a bunch of illegible characters!

Somehow we must take into consideration three important things:

- Which text is 'normal' text that must be left as it is, and which one is encrypted data that has to be decrypted

- How to handle encrypted data that cannot be decrypted

- How to optionally mask text that cannot be decrypted so that it looks nicer.

Go to the FieldEncryptionDecryption created before and replace it with this:

    using System;
    using System.ComponentModel;
    using System.IO;
    using System.Security.Cryptography;

    public class FieldEncryptionDecryption
    {
        private const string EncryptionPrefix = "crypto.";

        public object GetEncryptedOrDefault(object value, ICryptoTransform encryptor)
        {
            if (encryptor is null)
            {
                throw new ArgumentNullException(nameof(encryptor));
            }

            var isEncryptionNeeded = value != null;
            if (isEncryptionNeeded)
            {
                using var memoryStream = new MemoryStream();
                using var cryptoStream = new CryptoStream(memoryStream, encryptor, CryptoStreamMode.Write);
                using var writer = new StreamWriter(cryptoStream);

                var valueAsText = value.ToString();
                writer.Write(valueAsText);
                writer.Flush();
                cryptoStream.FlushFinalBlock();

                var encryptedData = memoryStream.ToArray();
                var encryptedText = Convert.ToBase64String(encryptedData);
                var result = $"{EncryptionPrefix}{encryptedText}";

                return result;
            }

            return default;
        }

        public object GetDecryptedOrDefault(object value, ICryptoTransform decryptor, Type destinationType)
        {
            if (value is null)
            {
                // A Json null was never encrypted (null values are written as null),
                // so there is nothing to decrypt or mask
                return null;
            }

            var isText = value is string;
            if (isText)
            {
                var valueAsText = (string)value;
                var isEncrypted = valueAsText.StartsWith(EncryptionPrefix);
                if (isEncrypted)
                {
                    var isDecryptorAvailable = decryptor != null;
                    if (isDecryptorAvailable)
                    {
                        var startIndex = EncryptionPrefix.Length;
                        var valueWithoutPrefix = valueAsText.Substring(startIndex);
                        var encryptedValue = Convert.FromBase64String(valueWithoutPrefix);

                        using var memoryStream = new MemoryStream(encryptedValue);
                        using var cryptoStream = new CryptoStream(memoryStream, decryptor, CryptoStreamMode.Read);
                        using var reader = new StreamReader(cryptoStream);

                        var decryptedText = reader.ReadToEnd();
                        var result = Parse(destinationType, decryptedText);

                        return result;
                    }

                    // The key was deleted: return a masked or default value instead
                    var maskedValue = GetMaskedValue(destinationType);

                    return maskedValue;
                }

                var valueParsed = Parse(destinationType, valueAsText);

                return valueParsed;
            }

            return value;
        }

        private object Parse(Type outputType, string valueAsString)
        {
            var converter = TypeDescriptor.GetConverter(outputType);
            var result = converter.ConvertFromString(valueAsString);

            return result;
        }

        private object GetMaskedValue(Type destinationType)
        {
            if (destinationType == typeof(string))
            {
                const string templateText = "***";

                return templateText;
            }

            var defaultValue = Activator.CreateInstance(destinationType);

            return defaultValue;
        }
    }

Notice there is a constant with the value "crypto." that will be used as a prefix for every encrypted value. With that prefix we are able to tell which text contains encrypted data and which contains text that is not encrypted.

We could have gone with a more restrictive approach and forced the stored Json events to be deserialized into the original classes that represent the events and contain the [PersonalData] and [DataSubjectId] attributes. By using the "crypto." prefix to clearly identify the encrypted values, however, we gain additional flexibility: Json can be deserialized into whichever class we like, as long as the Json's keys find a matching property. This is an extremely powerful feature that fits well with a weak-schema versioning approach in event-sourced systems.

The GetDecryptedOrDefault method will attempt to decrypt the value if the encryption key exists. If it does not, it will use the default value for the data type (e.g. 0 for numbers, DateTime.MinValue for dates, Guid.Empty for Guid, etc.) and a masked string of *** when the data type is a string.
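The defaults listed above can be checked with a tiny standalone helper. This is only an illustration of the masking rule; the class name MaskedDefaults is hypothetical, and it deliberately repeats the logic of GetMaskedValue so that it can be run on its own.

```csharp
using System;

// Hypothetical standalone helper (illustration only): "***" for strings,
// and whatever Activator.CreateInstance yields for other simple types.
public static class MaskedDefaults
{
    public static object For(Type destinationType)
    {
        if (destinationType == typeof(string))
        {
            return "***";
        }

        // For a value type this is the same as default(T), boxed
        return Activator.CreateInstance(destinationType);
    }
}
```

For instance, MaskedDefaults.For(typeof(int)) yields 0, MaskedDefaults.For(typeof(DateTime)) yields DateTime.MinValue and MaskedDefaults.For(typeof(Guid)) yields Guid.Empty. A consumer of a forgotten contact therefore sees a birthday of 01/01/0001, which is worth keeping in mind when building projections.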

Downstream Deserialization with a Decryption Mechanism

We created a custom serialization contract resolver for Newtonsoft.Json. Let's now create its equivalent for deserialization.

    using System;
    using System.Collections.Generic;
    using System.Security.Cryptography;
    using Newtonsoft.Json;
    using Newtonsoft.Json.Serialization;

    public class DeserializationContractResolver : DefaultContractResolver
    {
        private readonly ICryptoTransform _decryptor;
        private readonly FieldEncryptionDecryption _fieldEncryptionDecryption;

        public DeserializationContractResolver(
            ICryptoTransform decryptor,
            FieldEncryptionDecryption fieldEncryptionDecryption)
        {
            _decryptor = decryptor;
            _fieldEncryptionDecryption = fieldEncryptionDecryption;
            NamingStrategy = new CamelCaseNamingStrategy();
        }

        protected override IList<JsonProperty> CreateProperties(Type type, MemberSerialization memberSerialization)
        {
            var properties = base.CreateProperties(type, memberSerialization);
            foreach (var jsonProperty in properties)
            {
                var isSimpleValue = IsSimpleValue(type, jsonProperty);
                if (isSimpleValue)
                {
                    var jsonConverter = new DecryptionJsonConverter(_decryptor, _fieldEncryptionDecryption);
                    jsonProperty.Converter = jsonConverter;
                }
            }

            return properties;
        }

        private bool IsSimpleValue(Type type, JsonProperty jsonProperty)
        {
            var propertyInfo = type.GetProperty(jsonProperty.UnderlyingName);
            if (propertyInfo is null)
            {
                return false;
            }

            var propertyType = propertyInfo.PropertyType;
            var isSimpleValue = propertyType.IsValueType || propertyType == typeof(string);

            return isSimpleValue;
        }
    }

When it detects that a destination property is a simple value type or string likely to be encrypted, it instantiates another custom JsonConverter that uses the decryption functionality.

    using System;
    using System.Security.Cryptography;
    using Newtonsoft.Json;

    public class DecryptionJsonConverter : JsonConverter
    {
        private readonly ICryptoTransform _decryptor;
        private readonly FieldEncryptionDecryption _fieldEncryptionDecryption;

        public DecryptionJsonConverter(
            ICryptoTransform decryptor,
            FieldEncryptionDecryption fieldEncryptionService)
        {
            _decryptor = decryptor;
            _fieldEncryptionDecryption = fieldEncryptionService;
        }

        public override void WriteJson(JsonWriter writer, object value, Newtonsoft.Json.JsonSerializer serializer)
        {
            throw new NotImplementedException();
        }

        public override object ReadJson(JsonReader reader, Type objectType, object existingValue, Newtonsoft.Json.JsonSerializer serializer)
        {
            var value = reader.Value;
            var result = _fieldEncryptionDecryption.GetDecryptedOrDefault(value, _decryptor, objectType);

            return result;
        }

        public override bool CanConvert(Type objectType)
        {
            return true;
        }

        public override bool CanRead => true;

        public override bool CanWrite => false;
    }

As before, we need a way to return JsonSerializerSettings that use the above contract resolver and Json converter when deserializing. Add the following method to JsonSerializerSettingsFactory.

    public JsonSerializerSettings CreateForDecryption(string dataSubjectId)
    {
        var decryptor = _encryptorDecryptor.GetDecryptor(dataSubjectId);
        var fieldEncryptionDecryption = new FieldEncryptionDecryption();
        var deserializationContractResolver =
            new DeserializationContractResolver(decryptor, fieldEncryptionDecryption);
        var jsonDeserializerSettings = GetSettings(deserializationContractResolver);

        return jsonDeserializerSettings;
    }

And now add the following method to JsonSerializer.

    public IEvent Deserialize(ReadOnlyMemory<byte> data, ReadOnlyMemory<byte> metadata, string eventName)
    {
        var metadataJson = Encoding.UTF8.GetString(metadata.Span);
        var defaultJsonSettings = _jsonSerializerSettingsFactory.CreateDefault();
        var values =
            JsonConvert.DeserializeObject<IDictionary<string, string>>(metadataJson, defaultJsonSettings);

        var hasKey = values.TryGetValue(MetadataSubjectIdKey, out var dataSubjectId);
        var hasPersonalData = hasKey && !string.IsNullOrEmpty(dataSubjectId);
        var dataJsonDeserializerSettings =
            hasPersonalData
                ? _jsonSerializerSettingsFactory.CreateForDecryption(dataSubjectId)
                : _jsonSerializerSettingsFactory.CreateDefault();

        var eventType = _supportedEvents.Single(x => x.Name == eventName);
        var dataJson = Encoding.UTF8.GetString(data.Span);
        var persistableEvent =
            JsonConvert.DeserializeObject(dataJson, eventType, dataJsonDeserializerSettings);

        return (IEvent)persistableEvent;
    }

The process is the opposite of the one before. This time, given an event name, an array of bytes representing the data and another with the metadata, it checks the metadata for a data subject Id, which signals that the event contains encrypted data. It then retrieves the proper Json settings, with or without decryption capabilities, and deserializes the data into the appropriate type.

Notice that we did not use supportedEvents when serializing. This is an enumerable of all the event types that the application supports. By providing this list we make our solution more generic, as it will be capable of deserializing any given event type, even if the classes are not the original ones used when serializing or live in a different namespace, as long as the class name matches the event name. As previously mentioned, this is a handy feature when using a weak-schema versioning approach.
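Since the lookup relies purely on the class name, two supported event classes with the same name in different namespaces would make Single throw at read time. One way to surface that earlier, sketched here under the assumption that the list of supported events is known at startup, is to build a name-to-type map once; the class name EventTypeLookup is hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper (illustration only): resolves event types by class name.
public static class EventTypeLookup
{
    // ToDictionary throws an ArgumentException if two supported events share
    // a class name, so the clash shows up when the map is built.
    public static IReadOnlyDictionary<string, Type> Build(IEnumerable<Type> supportedEvents)
    {
        return supportedEvents.ToDictionary(type => type.Name, type => type);
    }
}
```

With such a map, the Single call in Deserialize could be replaced by an indexer lookup on the event name; whether that is worth the extra class is a matter of taste.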

Wire It All Together with an EventStoreDB gRPC Client

So far we have a solution to serialize and deserialize events capable of encrypting and decrypting values. Let's see how everything fits together with EventStoreDB.

We are going to need a mapper to transform our IEvent into EventData, the format that EventStoreDB needs, and another to map a ResolvedEvent to an IEvent using the JsonSerializer we created before.

public class EventConverter

{

private readonly JsonSerializer _jsonSerializer;

public EventConverter(JsonSerializer jsonSerializer)

{

_jsonSerializer = jsonSerializer;

}

public IEvent ToEvent(ResolvedEvent resolvedEvent)

{

var data = resolvedEvent.Event.Data;

var metadata = resolvedEvent.Event.Metadata;

var eventName = resolvedEvent.Event.EventType;

var persistableEvent = _jsonSerializer.Deserialize(data, metadata, eventName);

return persistableEvent;

}

public EventData ToEventData(IEvent @event)

{

var eventTypeName = @event.GetType().Name;

var id = Uuid.NewUuid();

var serializedEvent = _jsonSerializer.Serialize(@event);

var contentType = serializedEvent.IsJson ? "application/json" : "application/octet-stream";

var data = serializedEvent.Data;

var metadata = serializedEvent.MetaData;

var eventData = new EventData(id, eventTypeName, data, metadata, contentType);

return eventData;

}

}

Finally, let's create a wrapper capable of using an EventStoreDB gRPC client. Add a dependency on the EventStore.Client.Grpc.Streams NuGet package (its types live in the EventStore.Client namespace) and create the following class.

public class EventStore

{

private readonly EventStoreClient _eventStoreClient;

private readonly EventConverter _eventConverter;

public EventStore(

EventStoreClient eventStoreClient,

EventConverter eventConverter)

{

_eventStoreClient = eventStoreClient;

_eventConverter = eventConverter;

}

public async Task PersistEvents(string streamName, int aggregateVersion, IEnumerable<IEvent> eventsToPersist)

{

var events = eventsToPersist.ToList();

var count = events.Count;

if (count == 0)

{

return;

}

var expectedRevision = GetExpectedRevision(aggregateVersion, count);

var eventsData =

events.Select(x => _eventConverter.ToEventData(x));

if (expectedRevision == null)

await _eventStoreClient.AppendToStreamAsync(streamName, StreamState.NoStream, eventsData);

else

await _eventStoreClient.AppendToStreamAsync(streamName, expectedRevision.Value, eventsData);

}

public async Task<IEnumerable<IEvent>> GetEvents(string streamName)

{

const int start = 0;

const int count = 4096;

const bool resolveLinkTos = false;

var sliceEvents =

_eventStoreClient.ReadStreamAsync(Direction.Forwards, streamName, start, count, resolveLinkTos: resolveLinkTos);

var resolvedEvents = await sliceEvents.ToListAsync();

// Materialize the list so that each event is deserialized only once

var events =

resolvedEvents.Select(x => _eventConverter.ToEvent(x)).ToList();

return events;

}

private StreamRevision? GetExpectedRevision(int aggregateVersion, int numberOfEvents)

{

var originalVersion = aggregateVersion - numberOfEvents;

var expectedVersion = originalVersion != 0

? StreamRevision.FromInt64(originalVersion - 1)

: (StreamRevision?)null;

return expectedVersion;

}

}

This wrapper needs an EventStoreClient injected, which it uses to persist and read events. In both directions, the EventConverter does the mapping.
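The arithmetic in GetExpectedRevision is easy to get wrong by one, so here is a small worked sketch of it in isolation. It assumes, as the wrapper does, that aggregateVersion already counts the events about to be appended and that stream revisions are zero-based. The ExpectedRevisionCalculator name is hypothetical, and a plain long? stands in for StreamRevision? so that the snippet has no dependency on the client library.

```csharp
using System;

// Hypothetical stand-in for EventStore.GetExpectedRevision, using long? instead of StreamRevision?.
public static class ExpectedRevisionCalculator
{
    // Returns null when the stream should not exist yet (StreamState.NoStream in the wrapper),
    // otherwise the zero-based revision of the last event already stored.
    public static long? Calculate(int aggregateVersion, int numberOfEvents)
    {
        // Version of the aggregate before the new events were applied
        var originalVersion = aggregateVersion - numberOfEvents;

        return originalVersion == 0
            ? (long?)null
            : originalVersion - 1;
    }
}

public static class Program
{
    public static void Main()
    {
        // New aggregate: 3 events to append and version 3, so no stream is expected.
        Console.WriteLine(ExpectedRevisionCalculator.Calculate(3, 3).HasValue); // False

        // Existing aggregate: version 5 after 2 new events means 3 events are stored
        // (revisions 0, 1 and 2), so the expected revision is 2.
        Console.WriteLine(ExpectedRevisionCalculator.Calculate(5, 2)); // 2
    }
}
```

If another writer appended to the stream in the meantime, the expected revision no longer matches and EventStoreDB rejects the append, which is what gives the wrapper its optimistic concurrency check.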

Test Everything with an Event Store

If you have docker and docker-compose installed, you can easily spin up a docker container with EventStoreDB. Create an eventstore.yml file with the following content:

version: "3"

services:
  eventstore.db:
    image: eventstore/eventstore:21.6.0-buster-slim
    environment:
      - EVENTSTORE_CLUSTER_SIZE=1
      - EVENTSTORE_RUN_PROJECTIONS=All
      - EVENTSTORE_START_STANDARD_PROJECTIONS=true
      - EVENTSTORE_EXT_TCP_PORT=1113
      - EVENTSTORE_HTTP_PORT=2113
      - EVENTSTORE_INSECURE=true
      - EVENTSTORE_ENABLE_EXTERNAL_TCP=true
      - EVENTSTORE_ENABLE_ATOM_PUB_OVER_HTTP=true
    ports:
      - '1113:1113'
      - '2113:2113'
    volumes:
      - type: volume
        source: eventstore-volume-data
        target: /var/lib/eventstore
      - type: volume
        source: eventstore-volume-logs
        target: /var/log/eventstore
    networks:
      - eventstore.db

networks:
  eventstore.db:
    driver: bridge

volumes:
  eventstore-volume-data:
  eventstore-volume-logs:

Notice that we're using the latest stable EventStoreDB version available at the time of writing, running as an insecure single node because it's just for testing purposes. As written, the file mounts named volumes, so streams survive container restarts; to run fully in memory instead, the EVENTSTORE_MEM_DB=true setting can be added.

Run the following:

docker-compose -f eventstore.yml up

and there will be an event store accessible with the following connection string:

esdb://localhost:2113?tls=false

A good, deterministic and readable way to test all the code is to create the following integration test. It uses the xUnit and FluentAssertions libraries, and the scenario is the following.

- Instantiate the event store wrapper that uses all the functionality created

- Start a connection with the EventStoreDB docker container that should be running

- Create a ContactBookCreated event without personal data

- Create two ContactAdded events for different subject Ids

- Delete the encryption key used to encrypt the personal data in one of the ContactAdded events

The functionality under test is retrieving the events for the given stream. The assertions validate that there are three ordered events, properly deserialized, where the ContactAdded that belongs to the subject whose encryption key was deleted has illegible personal details.

public static class GetEventsTests

{

public class Given_A_ContactBookCreated_And_Two_Events_With_Personal_Data_Stored_And_Encryption_Key_For_One_Is_Deleted_When_Getting_Events

: Given_WhenAsync_Then_Test

{

private EventStore _sut;

private EventStoreClient _eventStoreClient;

private string _streamName;

private Guid _joeId;

private Guid _janeId;

private ContactAdded _expectedContactAddedOne;

private ContactAdded _expectedContactAddedTwo;

private IEnumerable<IEvent> _result;

protected override async Task Given()

{

const string connectionString = "esdb://localhost:2113?tls=false";

_eventStoreClient = new EventStoreClient(EventStoreClientSettings.Create(connectionString));

var supportedEvents =

new List<Type>

{

typeof(ContactBookCreated),

typeof(ContactAdded)

};

var cryptoRepository = new CryptoRepository();

var encryptorDecryptor = new EncryptorDecryptor(cryptoRepository);

var jsonSerializerSettingsFactory = new JsonSerializerSettingsFactory(encryptorDecryptor);

var jsonSerializer = new JsonSerializer(jsonSerializerSettingsFactory, supportedEvents);

var eventConverter = new EventConverter(jsonSerializer);

var aggregateId = Guid.NewGuid();

_streamName = $"ContactBook-{aggregateId.ToString().Replace("-", string.Empty)}";

_sut = new EventStore(_eventStoreClient, eventConverter);

var contactBookCreated =

new ContactBookCreated

{

AggregateId = aggregateId

};

_joeId = Guid.NewGuid();

var contactAddedOne =

new ContactAdded

{

AggregateId = aggregateId,

Name = "Joe Bloggs",

Birthday = new DateTime(1984, 1, 1, 0, 0, 0, DateTimeKind.Utc),

PersonId = _joeId,

Address =

new Address

{

Street = "Blue Avenue",

Number = 23,

CountryCode = "ES"

}

};

_janeId = Guid.NewGuid();

var contactAddedTwo =

new ContactAdded

{

AggregateId = aggregateId,

Name = "Jane Bloggs",

Birthday = new DateTime(1987, 12, 31, 0, 0, 0, DateTimeKind.Utc),

PersonId = _janeId,

Address =

new Address

{

Street = "Pink Avenue",

Number = 33,

CountryCode = "ES"

}

};

var eventsToPersist =

new List<IEvent>

{

contactBookCreated,

contactAddedOne,

contactAddedTwo

};

var aggregateVersion = eventsToPersist.Count;

await _sut.PersistEvents(_streamName, aggregateVersion, eventsToPersist);

cryptoRepository.DeleteEncryptionKey(_janeId.ToString());

_expectedContactAddedOne = contactAddedOne;

_expectedContactAddedTwo =

new ContactAdded

{

AggregateId = aggregateId,

Name = "***",

Birthday = default,

PersonId = _janeId,

Address =

new Address

{

Street = "***",

Number = default,

CountryCode = "ES"

}

};

}

protected override async Task When()

{

_result = await _sut.GetEvents(_streamName);

}

[Fact]

public void Then_It_Should_Retrieve_Three_Events()

{

_result.Should().HaveCount(3);

}

[Fact]

public void Then_It_Should_Have_Decrypted_The_First_ContactAdded_Event()

{

_result.ElementAt(1).Should().BeEquivalentTo(_expectedContactAddedOne);

}

[Fact]

public void Then_It_Should_Have_Masked_The_Second_ContactAdded_Event()

{

_result.ElementAt(2).Should().BeEquivalentTo(_expectedContactAddedTwo);

}

protected override void Cleanup()

{

_eventStoreClient.Dispose();

}

}

}

public class ContactAdded

: IEvent

{

public Guid AggregateId { get; set; }

[DataSubjectId]

public Guid PersonId { get; set; }

[PersonalData]

public string Name { get; set; }

[PersonalData]

public DateTime Birthday { get; set; }

public Address Address { get; set; } = new Address();

}

public class Address

{

[PersonalData]

public string Street { get; set; }

[PersonalData]

public int Number { get; set; }

public string CountryCode { get; set; }

}

public class ContactBookCreated

: IEvent

{

public Guid AggregateId { get; set; }

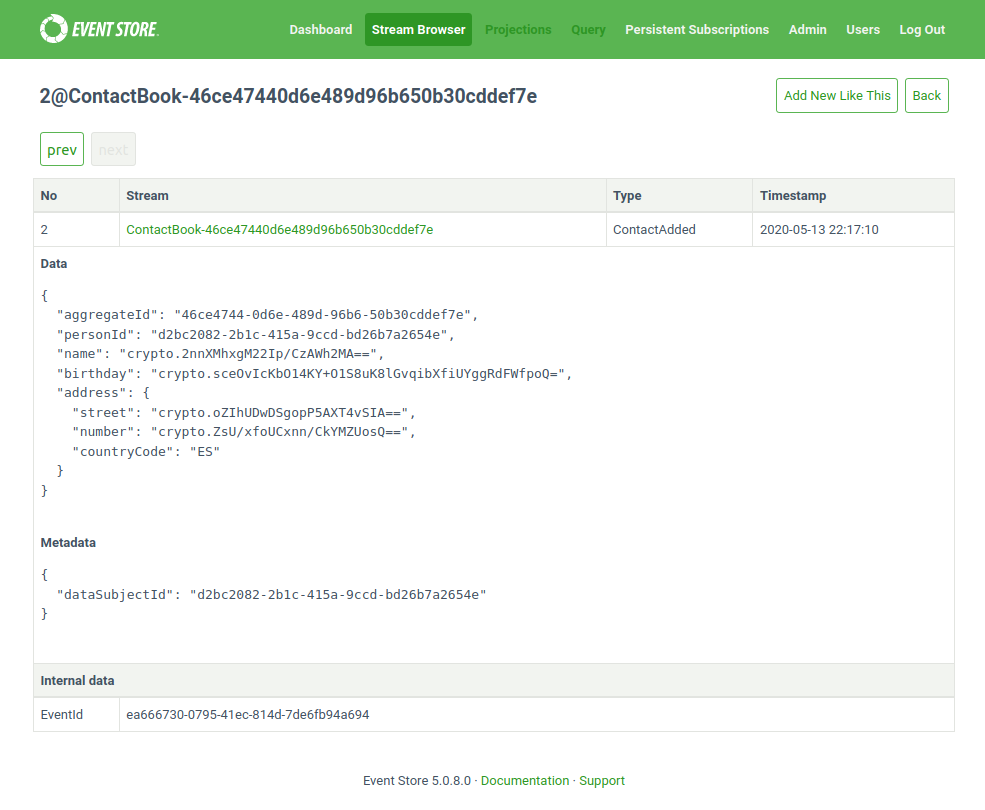

}If we had a look at how the events with encrypted data look like inside the EventStoreDB, here is an example:

You can also check the full source code for this article in our samples repository.